RESEARCH HIGHLIGHTS

Our research highlights serve as a collection of feature articles detailing recent scientific achievements on GCS HPC resources.

Researchers Turn to Neural Networks to Advance State-of-the-Art in Turbulence Simulations

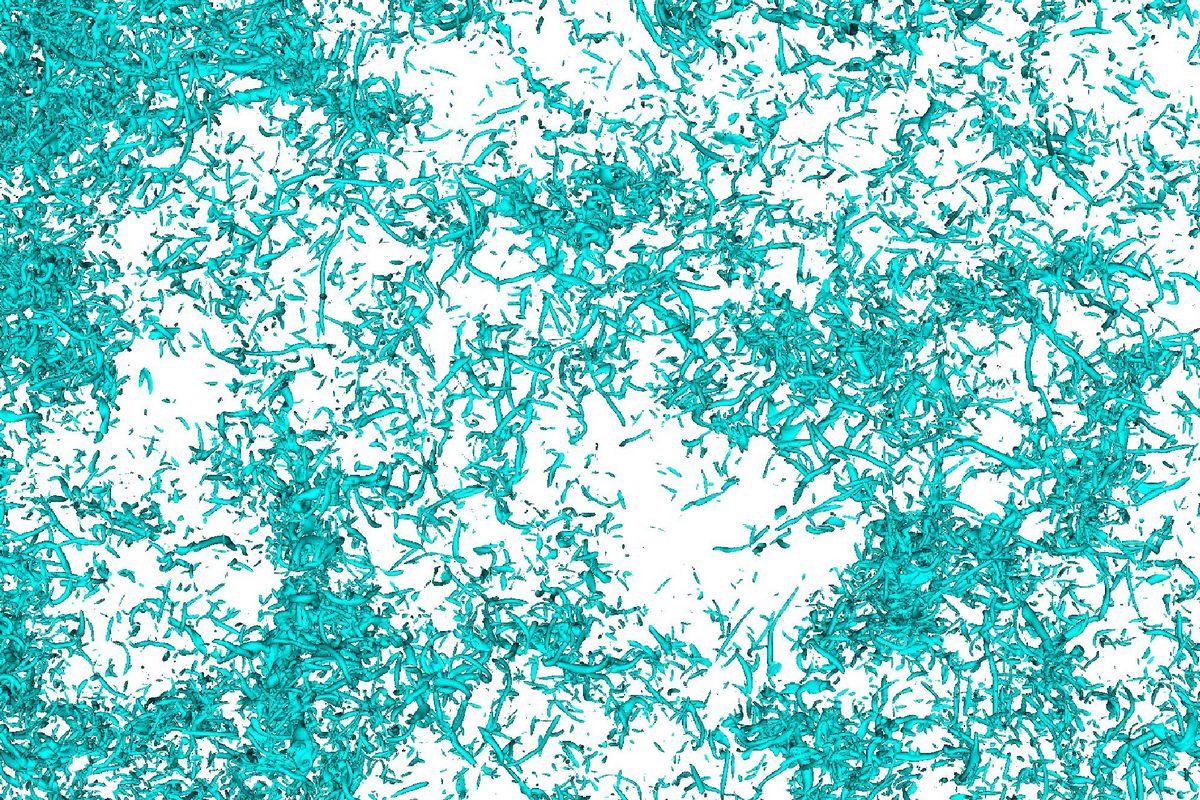

Perhaps no other problem has vexed scientists and engineers in the modern era as much as the quest to attain a comprehensive understanding of the small- and large-scale dynamics of turbulence in fluid flows. Turbulence impacts everything from fuel efficiency in cars and airplanes to global weather patterns, and while researchers have spent decades learning how it functions on a fundamental level, a deep, foundational knowledge of the small-scale dynamics that influence turbulent motion continues to evade our understanding.

In recent decades, high-performance computing (HPC) has become an indispensable tool to better understand the complex choreography of fluid motion that underlies turbulence. As computing power has grown, researchers have gone from rough models of turbulent fluid motion that include a host of simplifications or assumptions to so-called direct numerical simulations (DNS) that calculate the motions of individual parcels in a fluid. While DNS is accurate, it is also computationally demanding, meaning researchers need access to world-leading computing resources to solve the governing mathematical equations in a reasonable amount of time.

However, even on the world’s fastest HPC systems, DNS of turbulent flows encountered in nature and engineering is impossible. In recent years, researchers have gained a relatively new, powerful tool to help them understand turbulence at a fundamental level: artificial intelligence methods, such as deep neural networks, have opened a host of new opportunities for advancing the computational state of the art.

To that end, a team of researchers led by Dr. Dhawal Buaria at the Max Planck Institute for Dynamics and Self-Organization has been using the JUWELS supercomputer at the Jülich Supercomputing Centre to train neural networks that can help researchers understand turbulence at a fundamental level. “In tackling the formidable challenge of turbulence, there is an imperative need for a computational breakthrough,” Buaria said. “Neural networks have enabled a revolution across scientific disciplines, so it is natural that researchers are applying them to harness the complexities of turbulence.”

Using JUWELS, Buaria and his collaborators were able to train a neural network to capture the small-scale dynamics in turbulent fluid systems at lower Reynolds numbers (in essence, the Reynolds number is the ratio of the inertial forces that drive the fluid flow to the viscous forces that oppose the motion) and then predict the behavior at higher Reynolds numbers. The team published its results in PNAS.
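For readers who want the ratio described above in symbols, the standard textbook definition of the Reynolds number is sketched below. The symbols are conventional notation and do not come from the article: U is a characteristic flow speed, L a characteristic length scale, and ν the kinematic viscosity of the fluid.

$$
\mathrm{Re} = \frac{\text{inertial forces}}{\text{viscous forces}} = \frac{U L}{\nu}
$$

A faster flow, a larger system, or a less viscous fluid all raise Re, which is why flows in nature and engineering tend to sit at very high Reynolds numbers.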

Reaching new, complex heights

When talking about improving the accuracy of a turbulence simulation, most researchers mean they want to simulate turbulent fluid flows at higher Reynolds numbers.

“Reynolds numbers represent the degrees of freedom that are included when we run a turbulence simulation, which essentially demonstrates the scale or complexity of the problem,” Buaria said. “In order to do a perfectly accurate simulation of a turbulent fluid flow problem, we must resolve all the degrees of freedom.” In DNS, researchers break a simulation into a fine-grained computational lattice, or grid, then solve physics equations in each “box” to chart how particles move and interact, advancing time by microseconds or milliseconds to catch short-lived interactions. Resolving those degrees of freedom means that researchers must solve equations for each particle’s movement and interactions with other particles for each small step forward in time.

While solving all degrees of freedom at low Reynolds numbers is straightforward, Buaria and his collaborators, and indeed many who study turbulence, are focused on much larger systems at high Reynolds numbers. Buaria indicated that the number of degrees of freedom grows at least as the third power of the flow’s Reynolds number. This is a significant challenge for studying turbulent fluid flows coming out of a spray nozzle in an engine’s fuel injector, and a major bottleneck for astrophysicists trying to, for instance, study the role of turbulence in how stars burn or galaxies form.
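To get a feel for what cubic growth means in practice, the short sketch below estimates how much larger a simulation becomes when the Reynolds number rises. It assumes the degrees of freedom scale exactly as the third power of the Reynolds number, which the article gives only as a lower bound; the function name `dof_growth` and the sample values are purely illustrative.

```python
def dof_growth(re_low, re_high, exponent=3.0):
    """Factor by which the degrees of freedom grow between two Reynolds numbers.

    Assumes N ~ Re**exponent. The default exponent of 3 is the lower bound
    quoted in the article, so real costs may grow faster than this estimate.
    """
    if re_low <= 0 or re_high <= 0:
        raise ValueError("Reynolds numbers must be positive")
    return (re_high / re_low) ** exponent


if __name__ == "__main__":
    # Illustrative values only: how costly is each jump in Reynolds number?
    base = 1_000
    for multiple in (2, 10, 100):
        factor = dof_growth(base, base * multiple)
        print(f"Re x{multiple}: at least {factor:,.0f} times more degrees of freedom")
```

Under this assumption, even a modest increase in Reynolds number multiplies the size of the problem many times over, which is why brute-force DNS quickly outruns available computing power.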

The biggest increases in complexity primarily come from the small scales: as a simulation gets larger, it is increasingly difficult for researchers to solve the myriad small-scale interactions that happen as fluids are in motion. As a result, many scientists will fold in assumptions about fluid behavior at the small scales, which is, for instance, common practice in weather models and a major source of uncertainty.

To gain accurate, meaningful data, many turbulence researchers focus individual direct numerical simulations on specific small-scale interactions, sacrificing system size, simulation time, or both in the interest of getting accurate results in a timely manner.

These simulations’ results can be collected into larger datasets, which is exactly what researchers need to effectively train neural networks. Deep neural networks, modeled after the human brain, can represent extremely complex patterns in an efficient manner. Researchers feed a neural network with a large amount of input data. From there, the system “learns” to find relationships and correlations in the data and is given feedback so it can adapt and improve as it goes.
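The "learn from feedback" loop described above can be illustrated with a deliberately tiny sketch: a single linear unit fitted by gradient descent. This is not the team's network or data. The toy dataset, learning rate, step count, and the function name `train` are all assumptions chosen only to show the loop of predicting, measuring the error, and adjusting.

```python
def train(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error.

    Returns the learned weight, bias, and the loss recorded at every step.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(steps):
        # Forward pass: predict with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Feedback signal: how wrong the predictions currently are.
        history.append(sum(e * e for e in errors) / n)
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        # Adapt: move the parameters a small step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history


# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, history = train(xs, ys)
print(f"learned w={w:.4f}, b={b:.4f}, final loss={history[-1]:.2e}")
```

A deep neural network repeats the same cycle with many layers and millions of parameters, which is where large training datasets and supercomputers become necessary.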

By using a large amount of DNS results that are focused on smaller systems and lower Reynolds numbers, the researchers can train a neural network to find patterns in fluid motion and then extrapolate those patterns to predict behavior in much bigger systems that have higher Reynolds numbers. “By simulating lower Reynolds numbers and understanding the physics, we have access to all the quantities that we are interested in, and supercomputing has helped us reach slightly higher numbers in the last few years, so we now have databases that provide a range of Reynolds numbers that start to approach the ones you would see in naturally occurring systems,” Buaria said.

The team was able to use a solid foundation of low and moderate Reynolds numbers to train its neural network to predict interactions at high Reynolds numbers that are currently impossible to simulate.

HPC Centers Serve as One-Stop Shops for Innovative Computational Research

Having successfully demonstrated its approach, the team aims to keep refining its model. For Buaria, that will mean working closely with HPC centers to run the team’s calculations more efficiently on modern, heterogeneous HPC architectures.

While artificial intelligence has become an increasingly popular topic, and AI-driven chatbots have piqued society’s interest, these technologies are still in their early days. For researchers like Buaria and his collaborators, HPC centers provide a place to work at the intersection of computational science approaches.

With regard to AI-based solution methods, centers like JSC offer innovative HPC systems that support the entire workflow, from data generation to training neural networks and applying them to calculate new results. Additionally, researchers can work with experts dedicated not only to helping users solve their particular research questions, but also to finding best practices that help all researchers benefit from these new, promising technologies.

Buaria indicated that even with the help of HPC centers, researchers themselves are going to have to continue to modify traditional computational approaches in order to get the best returns from using AI methods. “There is no doubt that the field is going to keep growing,” he said. “We have so much data we can use to train these models, and we keep generating more all the time, meaning it will only get bigger over the next few years. The field will keep evolving in such a way that we, as researchers, will need to develop methods—whether it is building new networks or others—that can help us learn from all this data in a cohesive manner.”

-Eric Gedenk