Scientists Release Record-Setting AI Earth Observation Model

Research Highlight

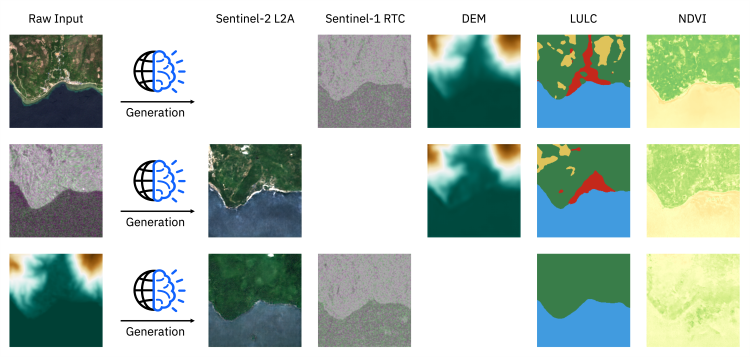

TerraMind enables any-to-any generation across modalities, producing consistent outputs from diverse input combinations. Image credit: Forschungszentrum Jülich and the ESA Φ-Lab.

As the field of high-performance computing (HPC) enters an era defined by the rise of generative artificial intelligence (AI), scientists are using it to develop so-called foundation models that support research within a given scientific domain or research focus.

As their name implies, foundation models offer a basis for scientists to modify and build on for their own research goals. One example is the FAST-EO project: Funded by the European Space Agency (ESA) Φ-lab, FAST-EO has developed a foundation model for Earth observation tasks. The work could have far-reaching implications for understanding the impacts of climate change and for estimating its effects on Earth observation applications.

As a partner in FAST-EO, the Jülich Supercomputing Centre (JSC) at Forschungszentrum Jülich contributes both AI expertise and the necessary hardware, in the form of the center’s JUWELS Booster supercomputer, to help develop the project’s flagship model, TerraMind.

“With TerraMind, we hope to have an impact in agriculture, forestry, and urban planning, but also in things like civil protections regarding flooding or other natural disaster observation and response,” said Dr. Rocco Sedona, researcher at JSC and collaborator on the project. “TerraMind helps us do Earth observation tasks much more rapidly, which helps inform people faster and with better information.”

HPC plays an essential role in foundation model training

Computer scientists primarily need two things to build a foundation model: a lot of data and a lot of computing power. This is because foundation models are essentially large-scale neural networks – a type of AI model that behaves similarly to how neurons exchange information in the human brain. They are trained with a large volume of data that starts off general and becomes increasingly specific. The resulting models can then be adapted easily for specific domains and tasks without the need for further large amounts of training data. Large language models such as ChatGPT were trained with hundreds of terabytes of written text, for instance. But for Earth observation, researchers use satellite imagery, elevation and other topographic data, and land-use maps. After compiling and organizing the relevant data, researchers give the model a task and provide feedback. This helps sharpen the model’s ability to generate an image, text, or weather forecast.

Researchers currently train AI models using both pixel-level and token-level datasets. Just like with photography, pixels represent individual pieces of raw data; tokens, on the other hand, are larger chunks of abstract data that represent a part of an image, section of text, or another object of interest for a given AI model. “Tokens help us to say what is represented within a dataset,” Sedona said. “In the case of Earth observation, that might be training the model to identify roads, airports, or even cornfields from satellite imagery, for instance.”
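To make the pixel-versus-token distinction concrete, the short Python sketch below cuts a toy image into small square patches, with each patch standing in for one token. This is only an illustration under simplifying assumptions: the function name `split_into_patches`, the 4×4 toy image, and the patch size are invented for this example and do not describe TerraMind's actual tokenizer, which produces learned, abstract representations rather than raw pixel blocks.

```python
from typing import List

Image = List[List[int]]  # a single-band image stored as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[List[int]]:
    """Cut an image into non-overlapping square patches, each flattened to a list.

    Illustrative only: each patch stands in for one "token". Real tokenizers
    learn a compact code for each patch instead of keeping the raw pixels.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Collect the pixels of one patch in row-major order
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A toy 4x4 "satellite image" made of 16 pixels (hypothetical values)
toy_image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
tokens = split_into_patches(toy_image, patch_size=2)
print(len(tokens))  # 4 patches from 16 pixels
print(tokens[0])    # [0, 1, 4, 5]
```

In this toy case, 16 pixels become 4 patch-level units, which hints at why working with tokens lets a model reason about larger pieces of a scene rather than individual pixels.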

For TerraMind, the project collaborators used the JUWELS Booster supercomputer at JSC to train the model with over 500 billion tokens. Due to its GPU-heavy architecture, the JUWELS system is well-suited for such large-scale AI training. The team harnessed that potential to achieve top performance on the PANGAEA benchmark, a newly developed set of metrics for Earth observation models. PANGAEA evaluates a model’s ability to correctly identify land cover, detect environmental changes, and perform other environmental monitoring tasks. TerraMind outperformed all previously evaluated models on PANGAEA.

“In order to train a model this large and complex, we need a resource like the JUWELS Booster,” Sedona said. “The actual training uses a lot of computing resources, but we also have a significant amount of hyperparameter tuning. To generate 500 billion tokens, we gathered millions of raw images that needed to be pre-processed and post-processed, plus the time spent breaking them down into tokens. These tasks would be impossible without a machine like JUWELS.”

Expanding the TerraMind foundation model supports larger research communities

While TerraMind has already outperformed other Earth observation models, the researchers have set their sights on expanding the model even further to improve performance and ultimately support even more research tasks.

JSC just inaugurated JUPITER, its newest flagship supercomputer. When fully operational, JUPITER will offer researchers roughly eight times as many GPUs as JUWELS. These GPUs are also tightly integrated with NVIDIA’s Grace CPU as part of the company’s GH200 Grace Hopper superchips.

“The GH200 superchips will be a big boost for working on this model,” Sedona said. “These superchips also have more memory, meaning that we will be able to use much more efficient algorithms than we currently have in place.”

While many AI researchers have significant experience using traditional large-scale HPC systems, others have limited experience using a system as powerful and complex as JUPITER. Sedona noted that while raw computing power is essential for the team to train a model like TerraMind, HPC centers like JSC also provide AI researchers with a variety of valuable services for training foundation models. JSC combines the expertise, raw computing power, and data infrastructure necessary to efficiently train a generative AI model. As part of JUPITER’s installation, JSC has built a new Modular Data Centre (MDC), which houses dedicated storage systems and provides project collaborators with more efficient data management options.

Additionally, Sedona indicated that the project team wants to make TerraMind as open and easy-to-use as possible. “We believe in the principles of open science,” he said. “This is publicly funded research, and we want to be open with what our model can achieve and make it available to as many people as possible.”

-Eric Gedenk

The team has a pre-print publication available on arXiv.org: https://arxiv.org/pdf/2504.11171.

For more information about TerraMind, you can also visit the FAST-EO website, https://www.fast-eo.eu/.

Funding for JUWELS was provided by the Ministry of Culture and Research of the State of North Rhine-Westphalia and the German Federal Ministry of Education and Research through the Gauss Centre for Supercomputing (GCS).

This article originally appeared in the autumn 2025 issue of InSiDE magazine.