ENGINEERING AND CFD

Superstructures in Turbulent Thermal Convection

Principal Investigator:

Detlef Lohse (1, 2), Richard Stevens (2)

Affiliation:

(1) Max-Planck-Institut für Dynamik und Selbstorganisation, Göttingen (Germany), (2) Max Planck Center Twente for Complex Fluid Dynamics and Physics of Fluids Group, University of Twente (The Netherlands)

Local Project ID:

pr74sa

HPC Platform used:

SuperMUC of LRZ

Date published:

Introduction

A tremendous variety of physical phenomena involve turbulence, such as bird and airplane flight, propulsion of fish and boats, sailing, and even galaxy formation. Computational fluid dynamics uses numerical methods and algorithms to analyze such turbulent flows. Turbulent flow is characterized by chaotic swirling movements that vary widely in size, from sub-millimeter eddies, through the extent of storm clouds, up to phenomena on a galactic scale. The interaction of the chaotic movements on different scales makes it very challenging to simulate and understand the underlying geometric structures within turbulent flows. At the same time, understanding these fundamental patterns is crucial for industry to optimize a wide range of applications.

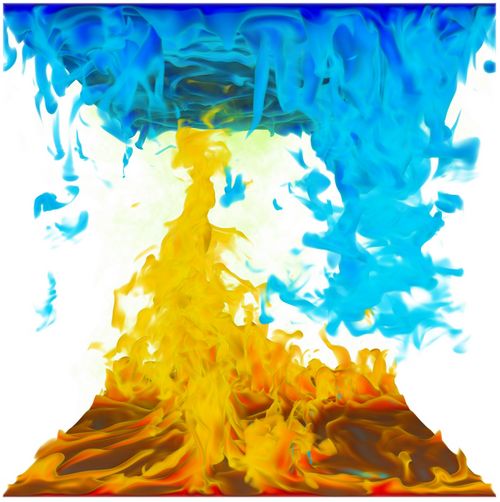

Turbulent thermal convection is a class of turbulent flows in which temperature differences drive the flow. Prime examples are convection in the atmosphere, the thermohaline circulation in the oceans, heating and ventilation in buildings, and industrial processes. The model system for turbulent thermal convection is Rayleigh-Bénard flow, in which a layer of fluid is heated from below and cooled from above. The temperature difference between the two plates leads to the formation of a large-scale flow pattern in which hot fluid moves up on one side, while cold fluid moves down on the other side of the cell, see figure 1. The beauty of the Rayleigh-Bénard system is that it is mathematically very well defined. This feature allows direct comparison of simulation results with theory, and one-to-one comparison with state-of-the-art laboratory experiments. As a result, for more than a century, Rayleigh-Bénard convection has been the perfect playground to develop novel experimental and simulation techniques in fluid dynamics that enable a better understanding of the underlying turbulent flow structures [2,3].

Results and Methods

According to the classical view on turbulence, strong turbulent fluctuations should ensure that the system geometry has only a minimal effect on the turbulent flow structures in highly turbulent flows, as the entire phase space is explored statistically. This view justifies the use of small aspect ratio domains, i.e. domains whose horizontal length is small compared to their height, when studying very turbulent flows. Such domains massively reduce the experimental or simulation cost of reaching the high Rayleigh number regime relevant for industrial applications and for astrophysical and geophysical phenomena; the Rayleigh number is the dimensionless temperature difference between the bottom and top plates. Therefore, in a quest to study Rayleigh-Bénard convection at ever increasing Rayleigh numbers, most experiments and simulations have focused on small aspect ratio cells. This approach has resulted in major developments in our understanding of heat transfer in turbulent flows.
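For reference, the two control parameters used in this discussion are conventionally written as follows. The symbols are the standard textbook ones and are not taken from this report: β is the thermal expansion coefficient of the fluid, g the gravitational acceleration, Δ the temperature difference between the plates, H the height of the fluid layer, L its horizontal extent, ν the kinematic viscosity and κ the thermal diffusivity.

$$
\mathrm{Ra} = \frac{\beta g \Delta H^{3}}{\nu \kappa}, \qquad \Gamma = \frac{L}{H}
$$

In this notation a small aspect ratio cell has Γ of order one or less, while a horizontally extended system has Γ much larger than one. Because Ra grows with the cube of H, high Rayleigh numbers are most cheaply reached in tall cells, which is one reason small aspect ratio cells have been so widely used.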

However, while heat transfer in industrial applications occurs in confined systems, many natural instances of convection take place in horizontally extended systems. Previous experiments and simulations at relatively low Rayleigh numbers have shown that large-scale horizontal flow patterns can emerge. Until now it was unclear what happens at very high Rayleigh numbers, when the flow in the bulk of the domain becomes fully turbulent. Motivated by major advances in the computational capabilities of supercomputers like SuperMUC, we set out to perform unprecedented simulations of very large aspect ratio Rayleigh-Bénard convection at high Rayleigh numbers.

To this end, we performed large-scale simulations of turbulent thermal convection using a second-order finite-difference flow solver developed in-house. Our code is written in Fortran 90, and large-scale parallelization is obtained with a two-dimensional domain decomposition implemented using the Message Passing Interface (MPI). On SuperMUC we performed simulations on grids with up to 60 × 10⁹ grid points using up to 16 thousand computational cores. A snapshot of the entire flow field requires up to 1.7 terabytes, while the entire database we generated is over 1 petabyte. The generated database allows us to perform advanced flow field analysis that unlocks detailed flow characteristics. This has, for example, allowed us to analyze the similarities and differences between the energy-containing flow structures found in pressure-driven and thermally driven wall-bounded flows. This analysis required many simulations with long-term averaging to reach statistical convergence. As a result, the simulations in this project required over a hundred million computational hours. The developed code has been made available to the fluid dynamics community at www.afid.eu and can also be used to simulate other canonical flow problems such as channel, Taylor-Couette, and plane Couette flow [4].
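The idea behind a two-dimensional domain decomposition can be illustrated with a short sketch. The Python code below is not the Fortran 90 solver described above; it is a minimal illustration, under stated assumptions, of how a three-dimensional grid can be split along two of its directions so that each task owns one "pencil" that spans the full third direction. The function names `split_1d` and `pencil_bounds`, the row-major ordering of ranks, and the choice of which direction stays undivided are all hypothetical and chosen for illustration only.

```python
def split_1d(n, parts, index):
    """Return the half-open range [start, stop) owned by block `index`
    when `n` grid points are split into `parts` nearly equal blocks."""
    base, extra = divmod(n, parts)
    # The first `extra` blocks each receive one additional point.
    start = index * base + min(index, extra)
    stop = start + base + (1 if index < extra else 0)
    return start, stop


def pencil_bounds(rank, grid_shape, proc_grid):
    """Map a linear rank to its pencil in a 2D decomposition.

    grid_shape = (nx, ny, nz). Only y and z are split, so every rank
    keeps the full x extent (assumption: x is the undivided direction).
    proc_grid = (py, pz), with py * pz ranks in total.
    """
    nx, ny, nz = grid_shape
    py, pz = proc_grid
    if not 0 <= rank < py * pz:
        raise ValueError("rank outside the process grid")
    # Row-major rank ordering (an assumption made for this sketch).
    iy, iz = divmod(rank, pz)
    return (0, nx), split_1d(ny, py, iy), split_1d(nz, pz, iz)


if __name__ == "__main__":
    # Toy sizes for illustration, not the production grid from the text.
    shape, procs = (8, 10, 7), (3, 2)
    for r in range(procs[0] * procs[1]):
        print(r, pencil_bounds(r, shape, procs))
```

Compared with splitting the grid along a single direction, a pencil layout of this kind allows more tasks to be used for a given grid, because the task count is limited by the product of two grid dimensions rather than by one.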

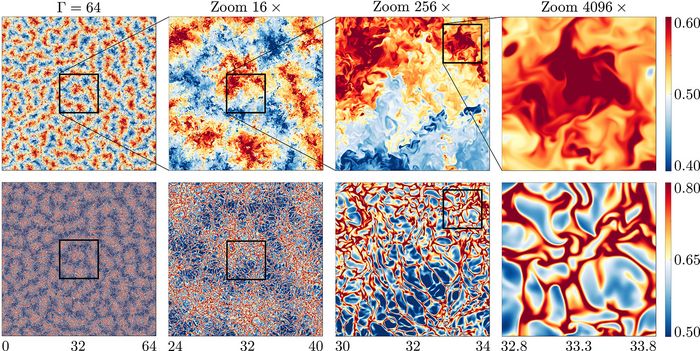

Figure 2: Snapshots of the temperature field in a horizontal domain that is 64 times as long as the domain height. The columns from left to right show successive zooms of the area indicated in the black box. The lower panels show the flow structure close to the bottom plate where small hot thermal plumes carry away the heat, while the upper panels reveal the large-scale flow patterns formed in the center of the domain. © MPI Göttingen and MPC Twente

Our simulation campaign [5] reveals that, in contrast to the classical view, turbulent thermal superstructures survive in fully turbulent flows, see figure 2. These thermal superstructures turn out to have a profound influence on the heat transfer. An intriguing and still unexplained result is that the heat transfer becomes independent of the system size before the flow structures become independent of the system geometry. Explaining this phenomenon will require further research. We hope that the discovery of these thermal superstructures will allow us to gain better insight into the mechanism that drives large-scale flow organization in astrophysical and geophysical systems, such as cloud formation in the Earth’s atmosphere.

On-going Research / Outlook

SuperMUC allowed us to perform unprecedented simulations of turbulent thermal convection. Computer simulations of such turbulent flows are notoriously demanding because of the large range of length and time scales that need to be resolved. Therefore, such groundbreaking simulations can only be performed on the largest supercomputers in the world, such as SuperMUC. In addition, our simulations were only made possible by algorithmic developments aimed at limiting the communication between different computational tasks. These developments improved the computational efficiency of our code and ensure good parallel efficiency on a large number of processors. Long-term storage and data accessibility are assured by using the open-source HDF5 data format.

Even with the massive computational and storage facilities offered by SuperMUC, it is still impossible to consider all physically relevant flow problems. For instance, a question of crucial importance is what happens at even stronger thermal forcing than can currently be simulated. For strong enough thermal forcing, one expects a transition to an ultimate state of thermal convection. It is conjectured that this ultimate regime is triggered when the boundary layers close to the plates become turbulent. Simulating ultimate thermal convection will require immense computational resources and will only become possible using a new generation of supercomputers like SuperMUC-NG.

Research Team

Alexander Blass (2), Detlef Lohse (PI) (1, 2), Richard Stevens (2), Roberto Verzicco (2), Xiaojue Zhu (2)

(1) Max Planck Institute for Dynamics and Self-Organization, Göttingen (Germany)

(2) Max Planck Center Twente for Complex Fluid Dynamics and Physics of Fluids Group, University of Twente (The Netherlands)

References and Links

[1] Group website: pof.tnw.utwente.nl; Project details: https://stevensrjam.github.io/Website/research_superstruc.html; Computational code: www.afid.eu

[2] G. Ahlers, S. Grossmann, D. Lohse, Rev. Mod. Phys. 81, 503 (2009).

[3] R.J.A.M. Stevens, R. Verzicco, D. Lohse, J. Fluid Mech. 643, 495-507 (2010).

[4] E.P. van der Poel, R. Ostilla-Mónico, J. Donners, R. Verzicco, Computers & Fluids 116, 10 (2015).

[5] R.J.A.M. Stevens, A. Blass, X. Zhu, R. Verzicco, D. Lohse: Turbulent thermal superstructures in Rayleigh-Bénard convection, Phys. Rev. Fluids 3, 041501(R) April 2018 (https://journals.aps.org/prfluids/abstract/10.1103/PhysRevFluids.3.041501)

Scientific Contact

Prof. Dr. Detlef Lohse

Max-Planck-Institut für Dynamik und Selbstorganisation, Göttingen, Germany

Max Planck Center Twente for Complex Fluid Dynamics and Physics of Fluids Group, University of Twente, The Netherlands

e-mail: d.lohse [@] utwente.nl

LRZ project ID: pr74sa

November 2019